Scraping and Processing the Data of a Christian Sonic AMV YouTube Channel

Scraping and processing the data of a YouTube channel is surprisingly simple, and I did it on one entirely dedicated to Christian Sonic AMVs.

This blogpost was originally written on January 25, 2026, before I started working on Wolf with a Blog.

So, I decided to take on a neat coding exercise with real-world application. I have been interested in this YouTube channel entirely dedicated to Sonic AMVs of contemporary Christian music, and if you thought this was going to be the "Christian Sonic fanart" phenomenon of the 2000s to early 2010s, it isn't; it's primarily based on the Sonic the Hedgehog movies. It's called Warriors of God.

Yes, I'm an atheist. You're talking to someone who decided to read all of the Christian children's chapter book series The Dead Sea Squirrels and write articles about it on the Villains Wiki (spoiler warnings for the links; I was disappointed the books weren't funny to me and it didn't help that the author is literally the co-creator of VeggieTales, but that's off-topic). I'm interested in how Christians implement their faith in modern-day creative mediums.

(Dig that Movie Excalibur Sonic!)

I don't watch Warriors of God videos often, since I'm not a CCM listener or AMV watcher (and they do lots of slow songs, which means it's a gamble for my vibes), but I stay tuned for their art postings (they're a good artist, also) and non-AMV updates. I like to see where they go.

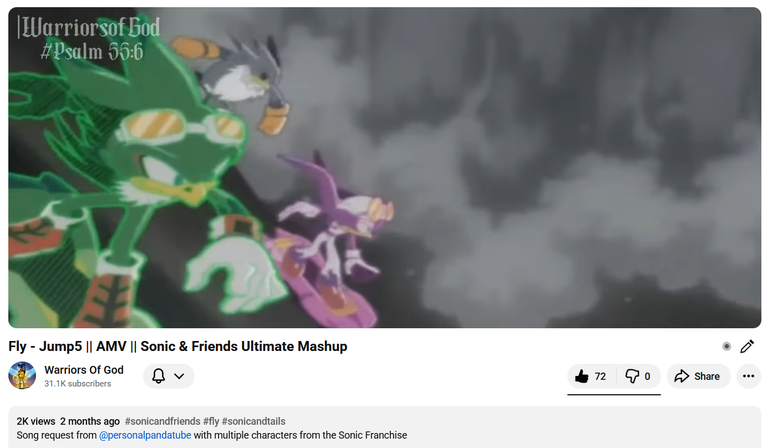

Warriors of God even did a request of mine, for "Fly" by Jump5. (If you're into 2000s Disney, you gotta know Jump5.) Jump5, and the fact that they were a Christian teen pop band, is why I'm interested in CCM, and subsequently Warriors of God. Okay, I did choose a more obscure song by an older group, hence the low view count, but it's still good. (The request was more grandiose, with every Fly-type character and original art, but they settled for a more modest video with a general fly theme.) I can't embed the video, so here's the link instead.

Warriors of God accepts requests for free from anyone, and this does get overwhelming. (They currently only accept birthday requests.) A while ago, in this comment on a video of theirs, I suggested a spreadsheet of the videos they've made, as well as pending requests, so they could keep track of what was and will be done. (I found my comment while looking randomly through the final result of this exercise. You'll see.) They never responded, but it would still be of the Lord's service to provide help unsolicited. (They're a devout believer.)

So, for my exercise, I decided to practice web scraping and data processing by obtaining and formatting metadata of Warriors of God's AMVs, so that they can have a database. (If they already have one, then I still practiced.)

When reading up on preparation the night before, I learned that I wouldn't be using the Python libraries Beautiful Soup or Selenium; my best bet would be yt-dlp's Python library. Yes, yt-dlp is used to download videos, but it can also extract metadata.

I whipped up a quick Python script that downloads all the channel's videos (except for shorts) using the URL "https://www.youtube.com/@WarriorsOfGod44/videos". It wasn't complicated; just use the YoutubeDL class with the options dictionary as parameters and run the one-line download function with the URL. I used the options skip_download and dump_single_json, hoping it would dump an actual JSON file.

I first ran it normally. It wasn't instant, but it was still quick for about 300 videos, until I got rate limited. I set the sleep interval for requests (since the normal sleep interval option only works for actual video downloads) to 7 seconds, the sweet spot between the recommended 5 to 10. This took about four hours.

After I passed the time with things like playing Fortnite (preparing in case the full Sonic collab rumors are true), the script ended up dumping the entire JSON string into the terminal instead of saving it as a file. Time. Wasted.

I discovered that the yt-dlp Python library has a better function for extracting metadata as a JSON, extract_info(). Using their example code as a base, I cooked this up as a test with a short playlist:

    # The following script scrapes the metadata of the provided playlist and each of
    # its videos and saves it as a JSON.
    # (Credit to yt-dlp's GitHub README for the starter code!)
    import json
    import yt_dlp
    import os

    playlist_url = "https://www.youtube.com/playlist?list=PL_qAnxUn4IpL_Bxc_yjKHbGLeFo8mHTcT"  # "Tails Tributes" playlist, FYI

    ydl_opts = {
        'sleep_interval_requests': 5
    }

    with yt_dlp.YoutubeDL(ydl_opts) as ydl:
        info = ydl.extract_info(playlist_url, download=False)

        curr_dir_path = os.path.dirname(os.path.realpath(__file__))
        with open(os.path.join(curr_dir_path, 'scraped_info.json'), "w") as json_file:
            json_file.write(json.dumps(ydl.sanitize_info(info)))

I was able to get metadata for each video in the playlist and practice writing scripts that would extract the data and organize and format them in CSV files. However, as said before, regular sleep intervals don't work for extracting data, and I'm not willing to wait several more hours.

Claude Sonnet 4.5 in GitHub Copilot recommended that I use the official YouTube data API, since it could get the job done in a few seconds. (yt-dlp was just recommended for being the most user-friendly.) I discovered YouTube's documentation for Python implementation, installed the required libraries, signed up for the API, and got down to work.

It was confusing at first. Using the username only didn't work, so I had to use the channel ID for obtaining the channel metadata, and subsequently the playlist ID for the uploads. Then, I got stuck on obtaining the uploads' metadata; the documentation article didn't provide Python code and the API Explorer's code didn't work. Braindead from burnout (those with ADHD can relate; hyperfocus…) and thinking I wouldn't get better code elsewhere, I asked Claude Sonnet 4.5 in Copilot, and it provided me code that actually worked. I did some revisions and reviewed the article's code for other programming languages to see if this was legitimate.
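The channel-to-uploads step described above can be sketched without any network access. This is only a minimal illustration, assuming a response shaped like what the API's `channels().list(part="contentDetails", id=...)` call returns; the helper name `uploads_playlist_id` and the sample IDs are hypothetical placeholders, not my actual code or data.

```python
def uploads_playlist_id(channel_response):
    """Return the uploads playlist ID from a channels.list response dict."""
    items = channel_response.get("items", [])
    if not items:
        # An empty "items" list is what you get when the lookup finds no channel
        raise ValueError("No channel found; check the channel ID")
    return items[0]["contentDetails"]["relatedPlaylists"]["uploads"]


# Hand-written stand-in for an API response (placeholder IDs, not real data)
sample_response = {
    "items": [
        {
            "id": "UC_placeholder_channel_id",
            "contentDetails": {
                "relatedPlaylists": {"uploads": "UU_placeholder_channel_id"}
            },
        }
    ]
}

playlist_id = uploads_playlist_id(sample_response)
print(playlist_id)
```

The returned ID is what then goes into the `playlistId` parameter when requesting the uploads.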

Basically, you can only get metadata from one page of the uploads at a time, and you add each page's items to the overall array as dictionary objects. You loop with the next page's token each time until there isn't one. Then, you dump all the text into a JSON file. (This DEV post by Bernd Wechner helped me with using the CSV library's DictWriter() class.)

# This script makes requests to the YouTube data API to obtain metadata of each

# of the channel's uploads and stores the metadata in a JSON file

# Original code from: https://developers.google.com/youtube/v3/docs/channels/list

import os

import googleapiclient.discovery

import json

def main():

# Prepare access to YouTube API

# Disable OAuthlib's HTTPS verification when running locally.

# *DO NOT* leave this option enabled in production.

os.environ["OAUTHLIB_INSECURE_TRANSPORT"] = "1"

api_service_name = "youtube"

api_version = "v3"

DEVELOPER_KEY = "[YOU ARE NOT GETTING MY API KEY]"

youtube = googleapiclient.discovery.build(

api_service_name, api_version, developerKey=DEVELOPER_KEY)

# Prepare obtaining videos

videos = []

next_page_token = None

# Go through all pages

while True:

# Request a page of the uploads

uploads_request = youtube.playlistItems().list(

part="snippet,contentDetails",

playlistId='UUbUlyGnA51jmCr7t4E5WlHQ',

maxResults=50,

pageToken=next_page_token

)

uploads_data = uploads_request.execute()

# Add to videos

videos.extend(uploads_data['items'])

# Proceed to next page if there's any

next_page_token = uploads_data.get('nextPageToken')

if not next_page_token:

break

# Dump and write to JSON file

with open('data_from_api.json', "w", encoding='utf-8') as json_file:

json.dump(videos, json_file, indent=2, ensure_ascii=False)

if __name__ == "__main__":

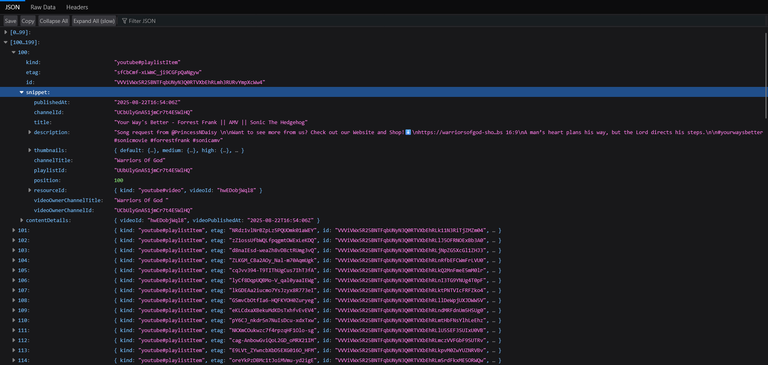

main()The JSON looks like this when opened in Firefox, which has a good JSON viewer:

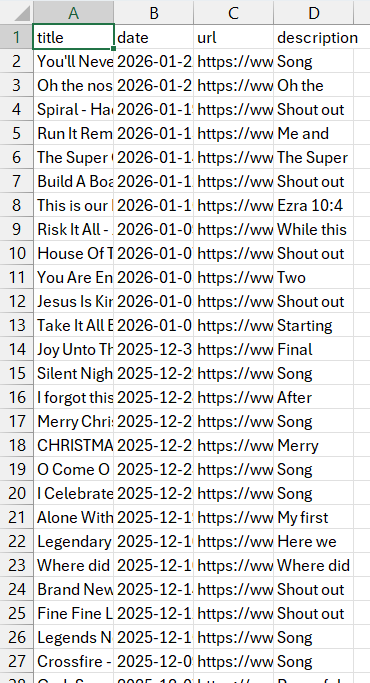

You've got the title, publish date, video ID, and description for each video. That's what we need.

We then extract that metadata from each entry of the JSON file into a dictionary (each value stored under its own key), collect the dictionaries in an array, and write each one as a row in a CSV file.

    # This script creates a roughly polished CSV file by extracting and organizing
    # information from the JSON file
    import json
    import csv

    # Open JSON file
    with open('data_from_api.json', encoding='UTF-8') as json_file:
        # Turn file data into Python object
        data = json.load(json_file)

    # Prepare Python array of video dictionary objects
    videos = []

    # Extract data for each video
    for vid_data in data:
        snippet = vid_data["snippet"]
        videos.append({
            "title": snippet["title"],
            "date": snippet["publishedAt"],
            "url": "https://www.youtube.com/watch?v=" + snippet["resourceId"]["videoId"],
            "description": snippet["description"]
        })

    # Write to CSV file
    with open('parsed_info_initial.csv', 'w', encoding='UTF-8', newline='') as csv_file:
        # Use the first dictionary's keys as the column names
        writer = csv.DictWriter(csv_file, fieldnames=list(videos[0].keys()))

        # Write header
        writer.writeheader()

        # Write rows
        for video in videos:
            writer.writerow(video)

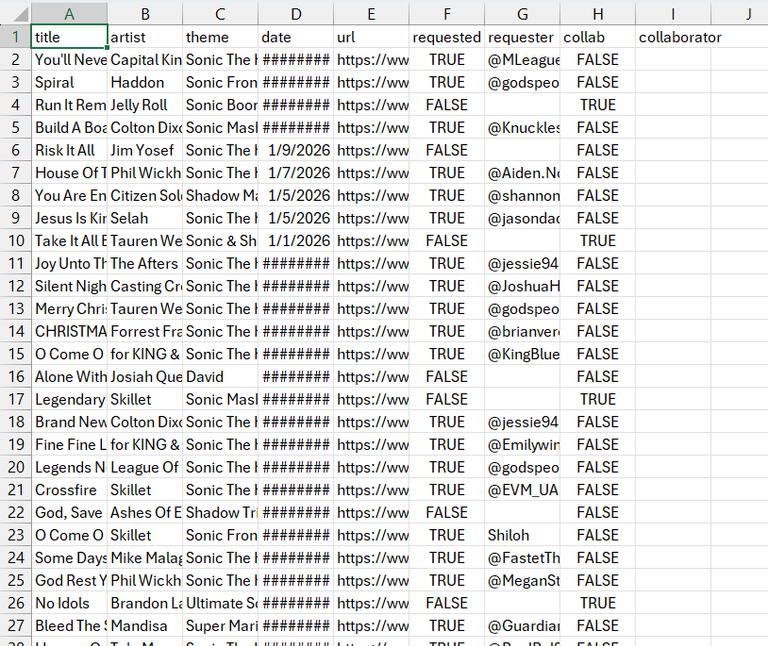
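Video descriptions usually span several lines and contain commas, so it's worth seeing that the CSV format keeps them intact. Here's a small standalone sketch of that round trip, using an in-memory buffer in place of a real file; the row is a made-up example, not actual channel data.

```python
import csv
import io

# Hypothetical row; the description deliberately contains a comma and a line break
rows = [{
    "title": "Example Song - Example Artist || AMV || Example Theme",
    "description": "First line, with a comma\nSecond line",
}]

# newline="" mirrors the newline='' argument used when opening a real CSV file
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
writer.writeheader()
writer.writerows(rows)

# Read the same text back and compare with what was written
buffer.seek(0)
read_back = list(csv.DictReader(buffer))
print(read_back[0]["description"] == rows[0]["description"])
```

The CSV module quotes any field that contains a comma or a line break, which is why the description comes back unchanged as a single cell.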

Now it's time to process the CSV file and format the data so that for each AMV, we have the song title, artist's name, theme, a spreadsheet software-compatible date, whether the video was requested, the requester's handle, and whether the video is a collab. Non-AMV videos are cut out as well. We rely on regular expressions, in which we write patterns for the program to detect matching phrases in text. Claude Sonnet 4.5 in Copilot was good for providing the patterns for description phrases indicating the videos were requests (see, this is what AI should be used for!), as well as Regex parts that I tested and learned about in regex101.

Each row is imported as a dictionary, and its values are processed to build a new dictionary holding the information we need. Then, the new dictionaries are all written to another CSV file.
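As a small standalone illustration of one of those processing steps, here's a sketch of the date conversion using Python's `datetime` module rather than Regex. It assumes the timestamp starts with a `YYYY-MM-DD` date; the function name `to_sheet_date` and the sample timestamp are hypothetical.

```python
from datetime import datetime


def to_sheet_date(published_at):
    """Convert a timestamp starting with YYYY-MM-DD into MM/DD/YYYY."""
    # Only the first 10 characters (the calendar date) are parsed;
    # the time-of-day part is ignored.
    parsed = datetime.strptime(published_at[:10], "%Y-%m-%d")
    return parsed.strftime("%m/%d/%Y")


print(to_sheet_date("2026-01-25T12:00:00Z"))  # hypothetical timestamp
```

One difference from a pure pattern match is that `strptime` raises a `ValueError` for impossible dates (such as month 13) rather than passing them through.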

    # This script constructs a nearly finished CSV file by analyzing each video's data
    # in the initial CSV file with Regex.
    import csv
    import re

    def regex_title(title):
        patterns = [
            r"^(.+?) - (.+?)\s*\|\| (.+?) \|\| (.+?)$",
            r"^(.+?) - (.+?)\s* \(([^)]+)\) \[(.+?)\]$"
        ]

        # Detect if title matches a pattern
        for pattern in patterns:
            match = re.match(pattern, title)
            if match:
                return {
                    "title": match.group(1).strip(),
                    "artist": match.group(2).strip(),
                    "type": match.group(3).strip(),
                    "theme": match.group(4).strip()
                }

        return None

    def regex_description(description):
        patterns = [
            r"[sS]hout out to (\S+) for requesting",
            r"[sS]ong [rR]equest from (\S+)",
            r"[Ss]hout out and [Hh]appy [Bb]irthday to (\S+)",
            r"[Ff]inal [Rr]equest (?:for this year )?from (\S+)"
        ]

        # Detect if description has a phrase that matches a pattern
        for pattern in patterns:
            match = re.search(pattern, description)
            if match:
                return {
                    "requested": True,
                    "requester": match.group(1)
                }

        return {
            "requested": False,
            "requester": None
        }

    def process_date(date):
        # Reformats the date string from YYYY-MM-DD... to MM/DD/YYYY
        pattern = r"^([0-9]{4})-([0-9]{2})-([0-9]{2})"
        match = re.match(pattern, date)
        if match:
            return f"{match.group(2)}/{match.group(3)}/{match.group(1)}"
        # No match: leave the cell empty
        return None

    # Prepare Python arrays of dictionaries for initial and final data
    videos_int = []
    videos = []

    # Open CSV file
    with open('parsed_info_initial.csv', encoding='UTF-8', newline='') as int_csv_file:
        # Read each row and extract it as a dictionary
        reader = csv.DictReader(int_csv_file)
        for row in reader:
            videos_int.append(row)

    for video in videos_int:
        # Get info from processing title
        title_info = regex_title(video["title"])
        if not title_info:
            # Skip because it's not an AMV (or a short)
            continue

        # Get info from processing description
        request_info = regex_description(video["description"])

        # Detect if video is a collab
        collab = "Collab" in title_info["type"]

        videos.append({
            "title": title_info["title"],
            "artist": title_info["artist"],
            "theme": title_info["theme"],
            "date": process_date(video["date"]),
            "url": video["url"],
            "requested": request_info["requested"],
            "requester": request_info["requester"],
            "collab": collab,
            "collaborator": ""  # This is hard to detect automatically without advanced NLP
        })

    # Write to new CSV file
    with open('parsed_info_final.csv', 'w', encoding='UTF-8', newline='') as fin_csv_file:
        # Use the first dictionary's keys as the column names
        writer = csv.DictWriter(fin_csv_file, fieldnames=list(videos[0].keys()))

        # Write header
        writer.writeheader()

        # Write rows
        for video in videos:
            writer.writerow(video)

(The Regex is not perfect, which means there are going to be errors here and there. For example, most videos follow the general title pattern "[title] - [artist] || [type] || [theme]", which makes the Regex implementation easy, but some deviate from it, such as this video called "I Celebrate The Day || Relient K || AMV || Sonic The Hedgehog". And that's their only Relient K video, and it's their slow song; Relient K is Christian pop punk, and pop punk fits Sonic to a tee... Okay, that's going on a tangent. Fortunately, the data can be edited easily, especially in the final spreadsheet form.)
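One way such an outlier could be caught automatically is a looser fallback pattern that's only tried when the strict one fails. This is just a sketch of the idea, not something I ran on the real data; `FALLBACK` and `parse_title` are hypothetical names, and the fallback assumes the title uses "||" between every part.

```python
import re

# Primary pattern, same shape as the first title pattern in the script
PRIMARY = r"^(.+?) - (.+?)\s*\|\| (.+?) \|\| (.+?)$"
# Hypothetical fallback for titles that also put "||" between song and artist
FALLBACK = r"^(.+?) \|\| (.+?) \|\| (.+?) \|\| (.+?)$"


def parse_title(title):
    """Try the strict pattern first, then the looser fallback."""
    for pattern in (PRIMARY, FALLBACK):
        match = re.match(pattern, title)
        if match:
            keys = ("title", "artist", "type", "theme")
            return dict(zip(keys, (group.strip() for group in match.groups())))
    # Neither pattern matched, so the title is treated as a non-AMV
    return None


odd = parse_title("I Celebrate The Day || Relient K || AMV || Sonic The Hedgehog")
print(odd)
```

Trying the strict pattern first means titles in the usual format are parsed exactly as before; the fallback only applies to titles that would otherwise be skipped.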

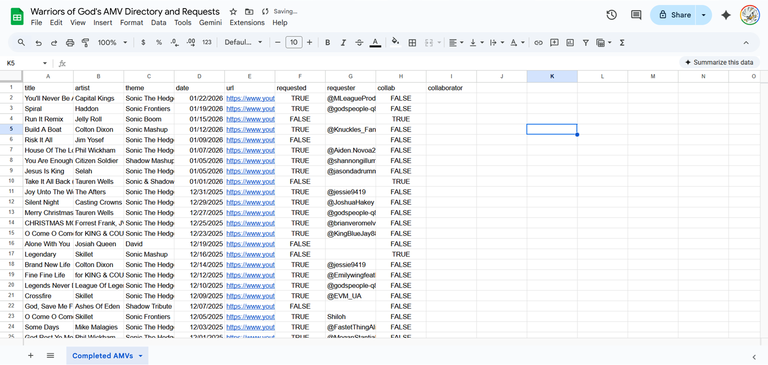

We now have a CSV file that's ready to take life as a more polished spreadsheet! Here it is in Google Sheets (thanks Google for providing online platforms for office-related files, and making them free!):

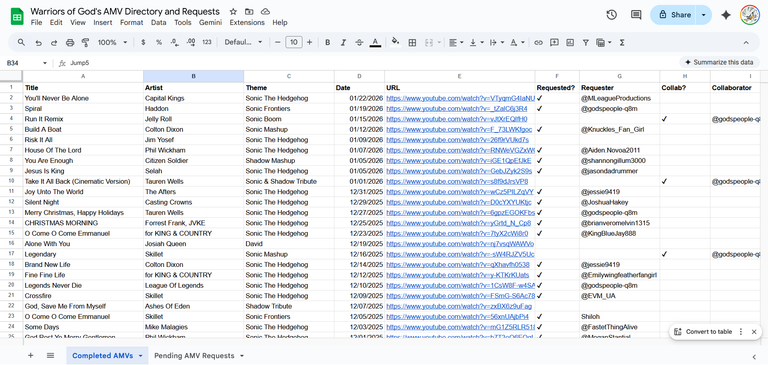

Here it is then re-formatted for visual organization. I changed the "TRUE"/"FALSE" values to either checkmarks or blanks so it doesn't look busy, and filled in the collaborator's name, since there are only a few collab videos and they all share one collaborator (who also does Christian Sonic AMVs). Also, the column widths are in multiples of 20.

I've also added other sheets for pending requests and credits (crediting Warriors of God and myself).

I'm now done. As of this writing, I'm waiting for Warriors of God to post a new video so I can share it with them and see if they'll be impressed or not, and if they'll thank God (literally; again, they're devout) for my efforts. (They tend to only respond to the comments of the newest video for the first several hours.) While I'm at it, the spreadsheet is publicly available here (yes, I changed "Directory" to "Database" because that's more accurate).

That was a fun introduction to web scraping and data processing with real-world application. It was basic, but that's what an introduction is supposed to be! Oh, and thank you for reading the first post of my new main blog, Wolf with a Blog. Stay tuned for more writings on computer science and potentially other topics! (The blogpost on the migration of Eleventy is still planned. In fact, it will be the next blogpost.)

Edit as of January 26, 2026: Warriors of God finally released a new video, and I shared my spreadsheet with them. It took some fumbling, as YouTube deletes some comments with links in them, but I gave them the edit privileges when they gave their email address. Their reaction was "Got to hand it to you, this is very Impressive [sic]".

I succeeded.